
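Most of the material online is tutorials for much older versions, so I decided to write one for the latest version myself.

One point must be stressed here: when installing an environment like this, always read the instructions on the official website. Otherwise you will breeze through the setup locally, the program throws an error on its first run, and you are left completely baffled.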

Downloading

Get Spark from the downloads page of the project website. This documentation is for Spark version 2.3.0. Spark uses Hadoop’s client libraries for HDFS and YARN. Downloads are pre-packaged for a handful of popular Hadoop versions. Users can also download a “Hadoop free” binary and run Spark with any Hadoop version by augmenting Spark’s classpath. Scala and Java users can include Spark in their projects using its Maven coordinates and in the future Python users can also install Spark from PyPI.
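If you manage the project with sbt instead of adding the jars by hand as this post does, the Maven coordinates mentioned above translate to something like the following `build.sbt`. This is a sketch under that assumption; the project name is hypothetical, and `%%` appends the Scala binary version so the dependency resolves to `spark-core_2.11`.

```scala
// build.sbt: minimal sketch, assuming sbt is used instead of the manual IDEA library setup
name := "spark_2.3"        // hypothetical project name
version := "0.1"
scalaVersion := "2.11.12"  // Spark 2.3.0 requires Scala 2.11.x

// %% appends the Scala binary version, so this resolves to spark-core_2.11:2.3.0
libraryDependencies += "org.apache.spark" %% "spark-core" % "2.3.0"
```

Pinning `scalaVersion` keeps the Scala compiler and the `_2.11` artifact suffix in lockstep.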

If you’d like to build Spark from source, visit Building Spark.

Spark runs on both Windows and UNIX-like systems (e.g. Linux, Mac OS). It’s easy to run locally on one machine: all you need is to have java installed on your system PATH, or the JAVA_HOME environment variable pointing to a Java installation.

This is the key point: Scala is already at version 2.12, but Spark 2.3.0 is compiled with Scala 2.11. This is exactly where I tripped up.

Spark runs on Java 8+, Python 2.7+/3.4+ and R 3.1+. For the Scala API, Spark 2.3.0 uses Scala 2.11. You will need to use a compatible Scala version (2.11.x).

Note that support for Java 7, Python 2.6 and old Hadoop versions before 2.6.5 were removed as of Spark 2.2.0. Support for Scala 2.10 was removed as of 2.3.0.
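Because a Scala or Java mismatch is the easiest trap here, a quick sanity check can save some head-scratching. The following is a minimal sketch (the object name `VersionCheck` is hypothetical) that reads the running Scala version via `scala.util.Properties.versionNumberString` and the Java version via the `java.specification.version` system property, and checks them against the requirements quoted above (Scala 2.11.x, Java 8+).

```scala
object VersionCheck {
  // Spark 2.3.0 is built against Scala 2.11.x
  def scalaOk(version: String): Boolean = version.startsWith("2.11.")

  // Java 8 reports "1.8"; Java 9 and later report "9", "10", "11", ...
  def javaOk(spec: String): Boolean = {
    val major =
      if (spec.startsWith("1.")) spec.drop(2).takeWhile(_.isDigit)
      else spec.takeWhile(_.isDigit)
    major.nonEmpty && major.toInt >= 8
  }

  def main(args: Array[String]): Unit = {
    val scalaVersion = scala.util.Properties.versionNumberString
    val javaSpec = System.getProperty("java.specification.version")
    println(s"Scala $scalaVersion OK for Spark 2.3.0: ${scalaOk(scalaVersion)}")
    println(s"Java $javaSpec OK for Spark 2.3.0: ${javaOk(javaSpec)}")
  }
}
```

Run it with the same JDK and Scala SDK that the IDEA project uses, since that is what the Spark program will see.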

scala

Just install Scala 2.11.12.

spark

Choose a Spark release: 2.3.0

Choose a package type: Pre-built for Apache Hadoop 2.7 and later

Download Spark: spark-2.3.0-bin-hadoop2.7.tgz

Verify this release using the 2.3.0 signatures and checksums and project release KEYS.

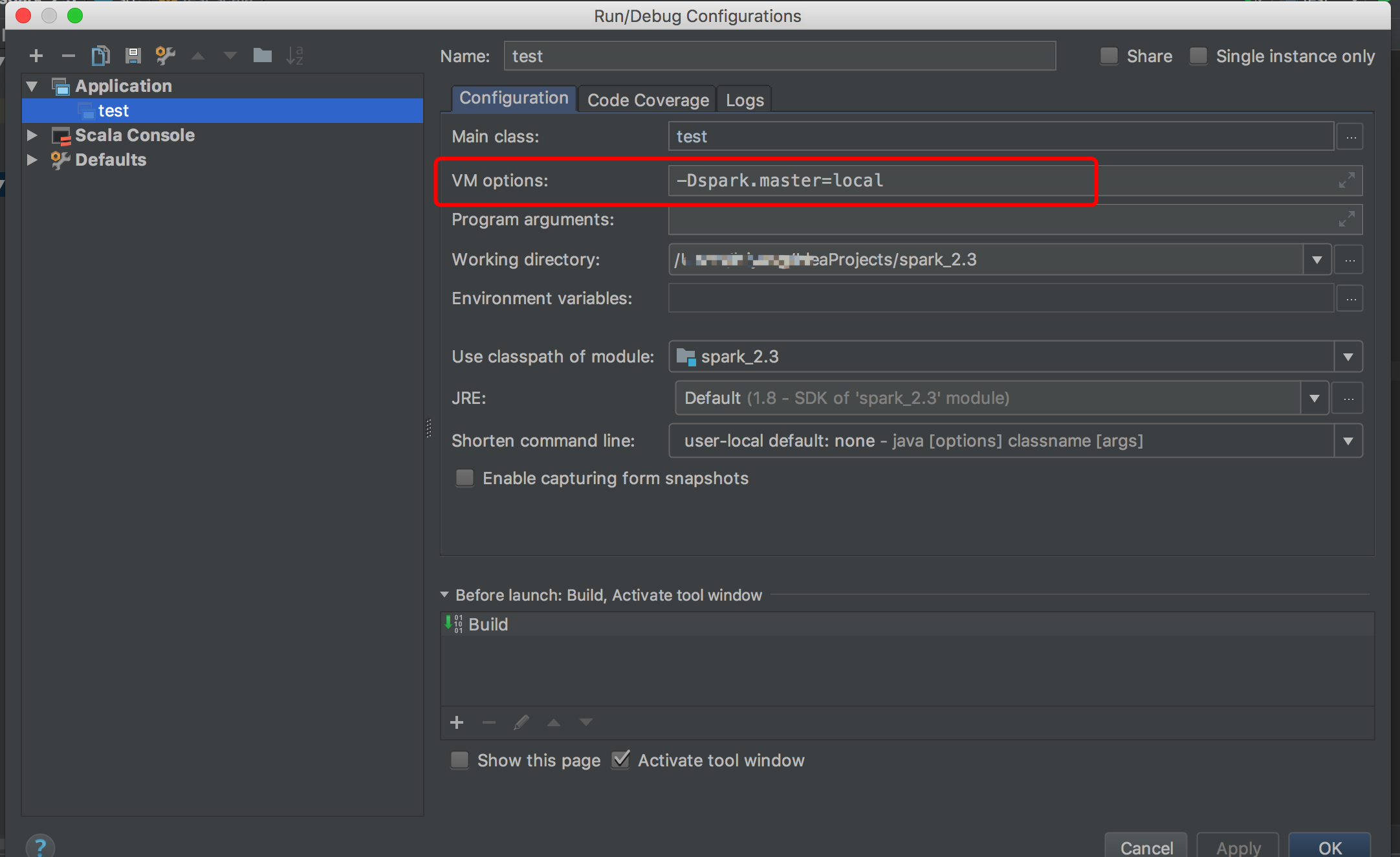
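To act on the "verify this release" advice, one option is to compute the archive's SHA-512 digest locally and compare it with the published checksum. A minimal sketch using only the JDK's `java.security.MessageDigest`; the object name `Checksum` and the default file name are assumptions. Published checksum files may group the hex digits in blocks or use uppercase, so `matches` ignores whitespace and case (paste only the hex part, not any file-name prefix).

```scala
import java.io.{BufferedInputStream, FileInputStream}
import java.security.MessageDigest

object Checksum {
  // Hex-encoded digest of a file, read in 8 KB chunks so large archives are not loaded at once
  def fileDigest(path: String, algorithm: String = "SHA-512"): String = {
    val md = MessageDigest.getInstance(algorithm)
    val in = new BufferedInputStream(new FileInputStream(path))
    try {
      val buf = new Array[Byte](8192)
      var read = in.read(buf)
      while (read != -1) {
        md.update(buf, 0, read)
        read = in.read(buf)
      }
    } finally in.close()
    md.digest().map(b => f"${b & 0xff}%02x").mkString
  }

  // Compare a published checksum with a computed one, ignoring whitespace and letter case
  def matches(expected: String, actual: String): Boolean =
    expected.filterNot(_.isWhitespace).equalsIgnoreCase(actual)

  def main(args: Array[String]): Unit = {
    val path = if (args.nonEmpty) args(0) else "spark-2.3.0-bin-hadoop2.7.tgz"
    println(fileDigest(path))
  }
}
```

This only checks integrity against the checksum; verifying the signature against the project's release KEYS is a separate step.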

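IDEA settings

We want the program to run in local (single-machine) mode, so the Run configuration needs an extra parameter: add `-Dspark.master=local` to its VM options (this flag is visible in the launch command in the output further down).

The IDEA settings are as follows: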

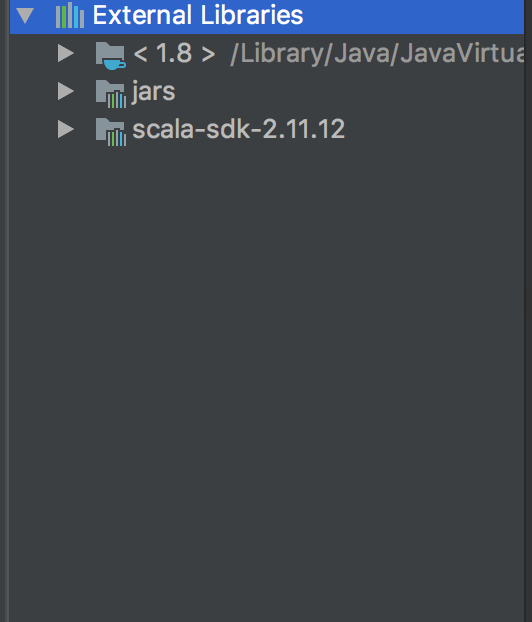
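The VM option works because `new SparkConf()` loads any JVM system properties whose names start with `spark.`. If you would rather keep a fallback in code, a sketch along these lines (the object name `LocalConf` and the `local[*]` default are assumptions, not part of the original setup) only sets a master when none was supplied, so `-Dspark.master=local` or `spark-submit --master` still takes precedence.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object LocalConf {
  // new SparkConf() picks up -Dspark.* system properties (e.g. -Dspark.master=local);
  // setIfMissing only fills in a master if none was provided that way
  def build(appName: String): SparkConf =
    new SparkConf().setAppName(appName).setIfMissing("spark.master", "local[*]")

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(build("test"))
    try println(s"master = ${sc.master}")
    finally sc.stop() // release the local driver and web UI
  }
}
```

`local` runs Spark with a single worker thread, while `local[*]` uses as many threads as there are logical cores.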

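Libraries setting: the jars added here are all Spark jars, i.e. the contents of the `jars` directory of the unpacked spark-2.3.0-bin-hadoop2.7 distribution (the Scala 2.11.12 SDK is on the classpath as well, as the launch command in the output shows).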
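Since the Spark jars carry the Scala binary version in their names (for example `spark-core_2.11-2.3.0.jar` in the classpath shown in the output further down), a small check can confirm which Scala version a Spark distribution was built for. A minimal sketch; the object name `JarCheck` and the default directory are hypothetical, so pass your own `jars` path as the first argument.

```scala
import java.io.File

object JarCheck {
  // Matches e.g. spark-core_2.11-2.3.0.jar: group 1 = Scala binary version, group 2 = Spark version
  private val SparkCore = """spark-core_(\d+\.\d+)-(.+)\.jar""".r

  def parse(jarName: String): Option[(String, String)] = jarName match {
    case SparkCore(scalaBin, sparkVersion) => Some((scalaBin, sparkVersion))
    case _                                 => None
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical default; point this at spark-2.3.0-bin-hadoop2.7/jars
    val dir = new File(if (args.nonEmpty) args(0) else "spark-2.3.0-bin-hadoop2.7/jars")
    // listFiles() returns null if the directory does not exist
    val names = Option(dir.listFiles()).map(_.map(_.getName).toSeq).getOrElse(Seq.empty)
    names.map(parse).collectFirst { case Some(found) => found } match {
      case Some((scalaBin, sparkVersion)) =>
        println(s"Spark $sparkVersion built for Scala $scalaBin")
      case None =>
        println(s"No spark-core jar found in ${dir.getPath}")
    }
  }
}
```

If this reports Scala 2.11, the project's Scala SDK must also be a 2.11.x release.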

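Running test code

With the steps above, the development environment is basically set up. Next, let's run a piece of code to test it.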

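```scala
import scala.math.random
import org.apache.spark.{SparkConf, SparkContext}

object test {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("test") // no setMaster: spark.master comes from -Dspark.master=local in the VM options
    val sc = new SparkContext(conf)
    val slices = if (args.length > 0) args(0).toInt else 2 // number of partitions, 2 by default
    val n = math.min(100000L * slices, Int.MaxValue).toInt // avoid overflow
    val count = sc.parallelize(1 until n, slices).map { _ => // one random point per element
      val x = random * 2 - 1 // x and y are uniform in [-1, 1)
      val y = random * 2 - 1
      if (x * x + y * y <= 1) 1 else 0 // 1 if the point lies inside the unit circle
    }.reduce(_ + _) // number of points inside the circle
    println(s"Pi is roughly ${4.0 * count / (n - 1)}") // inside / total approximates pi / 4
  }
}
```

The output should look roughly like the following. Congratulations, your setup works! Now roll up your sleeves and get to work!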

/Library/Java/JavaVirtualMachines/jdk1.8.0_144.jdk/Contents/Home/bin/java -Dspark.master=local "-javaagent:/Applications/IntelliJ IDEA CE.app/Contents/lib/idea_rt.jar=54452:/Applications/IntelliJ IDEA CE.app/Contents/bin" -Dfile.encoding=UTF-8 -classpath [JDK jars under jdk1.8.0_144.jdk/Contents/Home/jre/lib and Contents/Home/lib]:/Users/leiyang/IdeaProjects/spark_2.3/out/production/spark_2.3:[all jars under /Users/leiyang/spark-2.3.0-bin-hadoop2.7/jars]:[jars under /Users/leiyang/scala-2.11.12/lib] test

objc[9533]: Class JavaLaunchHelper is implemented in both /Library/Java/JavaVirtualMachines/jdk1.8.0_144.jdk/Contents/Home/bin/java (0x10ef054c0) and /Library/Java/JavaVirtualMachines/jdk1.8.0_144.jdk/Contents/Home/jre/lib/libinstrument.dylib (0x10ff884e0). One of the two will be used. Which one is undefined.

2018-03-17 15:36:15 INFO SparkContext:54 - Running Spark version 2.3.0

2018-03-17 15:36:16 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-03-17 15:36:16 INFO SparkContext:54 - Submitted application: test

2018-03-17 15:36:16 INFO SecurityManager:54 - Changing view acls to: leiyang

2018-03-17 15:36:16 INFO SecurityManager:54 - Changing modify acls to: leiyang

2018-03-17 15:36:16 INFO SecurityManager:54 - Changing view acls groups to:

2018-03-17 15:36:16 INFO SecurityManager:54 - Changing modify acls groups to:

2018-03-17 15:36:16 INFO SecurityManager:54 - SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(leiyang); groups with view permissions: Set(); users with modify permissions: Set(leiyang); groups with modify permissions: Set()

2018-03-17 15:36:21 INFO Utils:54 - Successfully started service 'sparkDriver' on port 54455.

2018-03-17 15:36:21 INFO SparkEnv:54 - Registering MapOutputTracker

2018-03-17 15:36:21 INFO SparkEnv:54 - Registering BlockManagerMaster

2018-03-17 15:36:21 INFO BlockManagerMasterEndpoint:54 - Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2018-03-17 15:36:21 INFO BlockManagerMasterEndpoint:54 - BlockManagerMasterEndpoint up

2018-03-17 15:36:21 INFO DiskBlockManager:54 - Created local directory at /private/var/folders/44/5cw_lq6s549182mqj_dg74j00000gn/T/blockmgr-1aede004-903f-455c-ba6d-a1bba08c2fc8

2018-03-17 15:36:21 INFO MemoryStore:54 - MemoryStore started with capacity 912.3 MB

2018-03-17 15:36:21 INFO SparkEnv:54 - Registering OutputCommitCoordinator

2018-03-17 15:36:21 INFO log:192 - Logging initialized @12724ms

2018-03-17 15:36:22 INFO Server:346 - jetty-9.3.z-SNAPSHOT

2018-03-17 15:36:22 INFO Server:414 - Started @12835ms

2018-03-17 15:36:22 INFO AbstractConnector:278 - Started ServerConnector@3e2822{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2018-03-17 15:36:22 INFO Utils:54 - Successfully started service 'SparkUI' on port 4040.

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@1568159{/jobs,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@31024624{/jobs/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@25bcd0c7{/jobs/job,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@63cd604c{/jobs/job/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@40dd3977{/stages,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3a4e343{/stages/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6a1d204a{/stages/stage,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6fff253c{/stages/stage/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6c6357f9{/stages/pool,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@591e58fa{/stages/pool/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@3954d008{/storage,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2f94c4db{/storage/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@593e824f{/storage/rdd,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@72ccd81a{/storage/rdd/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@6d8792db{/environment,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@64bc21ac{/environment/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@493dfb8e{/executors,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@5d25e6bb{/executors/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@ce5a68e{/executors/threadDump,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@9d157ff{/executors/threadDump/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@2f162cc0{/static,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@15a902e7{/,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@7876d598{/api,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@4985cbcb{/jobs/job/kill,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@72f46e16{/stages/stage/kill,null,AVAILABLE,@Spark}

2018-03-17 15:36:22 INFO SparkUI:54 - Bound SparkUI to 0.0.0.0, and started at http://10.33.100.83:4040

2018-03-17 15:36:22 INFO Executor:54 - Starting executor ID driver on host localhost

2018-03-17 15:36:22 INFO Utils:54 - Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 54456.

2018-03-17 15:36:22 INFO NettyBlockTransferService:54 - Server created on 10.33.100.83:54456

2018-03-17 15:36:22 INFO BlockManager:54 - Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2018-03-17 15:36:22 INFO BlockManagerMaster:54 - Registering BlockManager BlockManagerId(driver, 10.33.100.83, 54456, None)

2018-03-17 15:36:22 INFO BlockManagerMasterEndpoint:54 - Registering block manager 10.33.100.83:54456 with 912.3 MB RAM, BlockManagerId(driver, 10.33.100.83, 54456, None)

2018-03-17 15:36:22 INFO BlockManagerMaster:54 - Registered BlockManager BlockManagerId(driver, 10.33.100.83, 54456, None)

2018-03-17 15:36:22 INFO BlockManager:54 - Initialized BlockManager: BlockManagerId(driver, 10.33.100.83, 54456, None)

2018-03-17 15:36:22 INFO ContextHandler:781 - Started o.s.j.s.ServletContextHandler@71154f21{/metrics/json,null,AVAILABLE,@Spark}

2018-03-17 15:36:23 INFO SparkContext:54 - Starting job: reduce at test.scala:14

2018-03-17 15:36:23 INFO DAGScheduler:54 - Got job 0 (reduce at test.scala:14) with 2 output partitions

2018-03-17 15:36:23 INFO DAGScheduler:54 - Final stage: ResultStage 0 (reduce at test.scala:14)

2018-03-17 15:36:23 INFO DAGScheduler:54 - Parents of final stage: List()

2018-03-17 15:36:23 INFO DAGScheduler:54 - Missing parents: List()

2018-03-17 15:36:23 INFO DAGScheduler:54 - Submitting ResultStage 0 (MapPartitionsRDD[1] at map at test.scala:10), which has no missing parents

2018-03-17 15:36:23 INFO MemoryStore:54 - Block broadcast_0 stored as values in memory (estimated size 1768.0 B, free 912.3 MB)

2018-03-17 15:36:24 INFO MemoryStore:54 - Block broadcast_0_piece0 stored as bytes in memory (estimated size 1167.0 B, free 912.3 MB)

2018-03-17 15:36:24 INFO BlockManagerInfo:54 - Added broadcast_0_piece0 in memory on 10.33.100.83:54456 (size: 1167.0 B, free: 912.3 MB)

2018-03-17 15:36:24 INFO SparkContext:54 - Created broadcast 0 from broadcast at DAGScheduler.scala:1039

2018-03-17 15:36:24 INFO DAGScheduler:54 - Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at test.scala:10) (first 15 tasks are for partitions Vector(0, 1))

2018-03-17 15:36:24 INFO TaskSchedulerImpl:54 - Adding task set 0.0 with 2 tasks

2018-03-17 15:36:24 INFO TaskSetManager:54 - Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7853 bytes)

2018-03-17 15:36:24 INFO Executor:54 - Running task 0.0 in stage 0.0 (TID 0)

2018-03-17 15:36:24 INFO Executor:54 - Finished task 0.0 in stage 0.0 (TID 0). 824 bytes result sent to driver

2018-03-17 15:36:24 INFO TaskSetManager:54 - Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7853 bytes)

2018-03-17 15:36:24 INFO Executor:54 - Running task 1.0 in stage 0.0 (TID 1)

2018-03-17 15:36:24 INFO TaskSetManager:54 - Finished task 0.0 in stage 0.0 (TID 0) in 202 ms on localhost (executor driver) (1/2)

2018-03-17 15:36:24 INFO Executor:54 - Finished task 1.0 in stage 0.0 (TID 1). 824 bytes result sent to driver

2018-03-17 15:36:24 INFO TaskSetManager:54 - Finished task 1.0 in stage 0.0 (TID 1) in 38 ms on localhost (executor driver) (2/2)

2018-03-17 15:36:24 INFO TaskSchedulerImpl:54 - Removed TaskSet 0.0, whose tasks have all completed, from pool

2018-03-17 15:36:24 INFO DAGScheduler:54 - ResultStage 0 (reduce at test.scala:14) finished in 0.727 s

2018-03-17 15:36:24 INFO DAGScheduler:54 - Job 0 finished: reduce at test.scala:14, took 0.873671 s

Pi is roughly 3.141915709578548

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 23

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 6

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 16

2018-03-17 15:36:24 INFO SparkContext:54 - Invoking stop() from shutdown hook

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 7

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 14

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 22

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 2

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 19

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 18

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 5

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 21

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 9

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 20

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 11

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 4

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 12

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 13

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 0

2018-03-17 15:36:24 INFO ContextCleaner:54 - Cleaned accumulator 24

2018-03-17 15:36:24 INFO AbstractConnector:318 - Stopped Spark@3e2822{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2018-03-17 15:36:24 INFO SparkUI:54 - Stopped Spark web UI at http://10.33.100.83:4040

2018-03-17 15:36:24 INFO BlockManagerInfo:54 - Removed broadcast_0_piece0 on 10.33.100.83:54456 in memory (size: 1167.0 B, free: 912.3 MB)

2018-03-17 15:36:24 INFO MapOutputTrackerMasterEndpoint:54 - MapOutputTrackerMasterEndpoint stopped!

2018-03-17 15:36:24 INFO MemoryStore:54 - MemoryStore cleared

2018-03-17 15:36:24 INFO BlockManager:54 - BlockManager stopped

2018-03-17 15:36:24 INFO BlockManagerMaster:54 - BlockManagerMaster stopped

2018-03-17 15:36:24 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint:54 - OutputCommitCoordinator stopped!

2018-03-17 15:36:24 INFO SparkContext:54 - Successfully stopped SparkContext

2018-03-17 15:36:24 INFO ShutdownHookManager:54 - Shutdown hook called

2018-03-17 15:36:24 INFO ShutdownHookManager:54 - Deleting directory /private/var/folders/44/5cw_lq6s549182mqj_dg74j00000gn/T/spark-f8656983-0b80-4364-8b35-99bd4f2282e5

Process finished with exit code 0
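The printed value follows from the logic of the test program: points are drawn uniformly from the square $[-1,1]^2$ (area 4), and the fraction that lands inside the unit circle (area $\pi$) approximates $\pi/4$. With the default `slices = 2`, $n = 200000$, so the program samples $n - 1 = 199999$ points; working backwards from the output above, 157095 of them fell inside the circle:

$$
\frac{\text{count}}{n-1} \approx \frac{\pi}{4}
\quad\Longrightarrow\quad
\pi \approx 4\cdot\frac{\text{count}}{n-1} = 4\cdot\frac{157095}{199999} \approx 3.1419157
$$

Because this is a Monte Carlo estimate, its error shrinks roughly in proportion to $1/\sqrt{n}$, so passing a larger `slices` argument (more samples) gives a slowly improving estimate.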